In a previous article, we looked at why the FDA saw a need for many companies to change their approach to Computer System Validation (CSV). In this article we will look at how a more structured risk assessment approach can lead to greater computerised system rewards – and at a lower cost.

Fundamentally, the FDA had identified that too many companies had lost focus on what they were attempting to achieve with their CSV efforts and were too focused on producing documentation to support an audit.

This focus, and the costs that came with it, were inhibiting the uptake of new technologies and therefore, rather than reducing risks, were increasing them.

Although you may read in other articles that CSA will replace CSV, this is not the FDA’s intent. They have clearly stated that the CSA approach is not a change to the GAMP methodology, just a refocusing of effort.

The FDA CSA guidance document is listed for draft release in September 2020, but a lot of ‘external guidance’ has already been published.

The initial talking point for CSA change was about testing. It was stated that many manufacturers spend 80% of their time on documenting and only 20% on actual testing. If we could reduce that time taken on documentation, then either:

- the overall time would be reduced, or

- we could spend MORE time on actual testing and still use the overall time

As many believe that CSV in its current form is not a value-added activity, there does seem to be a strong push for the first option above.
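The two options above can be made concrete with some simple arithmetic. The sketch below is illustrative only: the 80/20 split comes from the figure quoted above, while the 100-hour project, the 50% documentation reduction and the function name `rebalance` are assumptions chosen purely for the example.

```python
def rebalance(total_hours, doc_share=0.8, doc_reduction=0.5):
    """Compare the two options for a given cut in documentation effort.

    doc_share     -- fraction of time spent documenting (80% as quoted above)
    doc_reduction -- assumed fraction of documentation effort removed
    """
    doc_hours = total_hours * doc_share
    test_hours = total_hours - doc_hours
    saved = doc_hours * doc_reduction

    # Option 1: bank the saving, so the overall time is reduced.
    shorter_project = {
        "documentation": doc_hours - saved,
        "testing": test_hours,
        "total": total_hours - saved,
    }
    # Option 2: keep the overall time and spend the saving on actual testing.
    more_testing = {
        "documentation": doc_hours - saved,
        "testing": test_hours + saved,
        "total": total_hours,
    }
    return shorter_project, more_testing


option_1, option_2 = rebalance(100)
print(option_1)
print(option_2)
```

Under these assumed numbers, the first option shortens the project while leaving testing at 20 hours, and the second keeps the project at 100 hours but triples the time spent on actual testing.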

Different testing approaches have been suggested:

- scripted testing – using formally approved test scripts to produce documented evidence of the testing

- structured non-scripted testing – using test case intent to simply pass/fail functionality

- ad-hoc testing – performed without planning and documentation

Although technically we only need to know that a function works, the ad-hoc testing approach is a ‘hit or miss’ approach if not supported with some other formal testing. Time pressures, tester knowledge and other ‘agendas’ may all come into play to reduce ad-hoc testing effectiveness.

Also, as part of a CSA approach, there is a growing push to do less testing when a system is assessed as an ‘indirect impact’ system, that is, any system that does not have a direct impact on patient safety or product quality.

This is where the level of risk vs reward needs to be considered.

For many years, some companies have used the GAMP category of a system to dictate what documentation they produce and the approach they take to validate that system.

In reality, the GAMP category and whether the system is a ‘direct’ or ‘indirect’ impact system are factors in the overall risk (and approach you should take), but it is too simple to say that these factors alone should dictate the effort you perform.

Other factors also need to be considered: size, complexity, usage, training, modification frequency, history, support, experience and, something that is missing from all this discussion, business risk.

Note that CSA has come about because of the perceived cost of CSV – but there is also a cost of failure (when doing too little).

So, by reducing your risk assessment scope simply to justify less testing, you may end up with a short-term gain – but no long-term reward.

However, by moving a large proportion of your scripted testing into structured non-scripted testing, and still allowing ad-hoc testing with efforts and outcomes noted, you will reduce your testing preparation and performance time whilst still assuring yourself that your system meets its requirements.

By applying a simple GAMP functional risk assessment on each requirement, you can justify what level of testing you perform.

The more knowledge you have of both your process and the software product automating it, the more accurate your risk assessment will be.

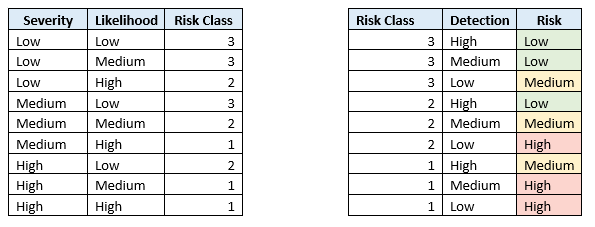

The well-documented GAMP 5.0 risk assessment approach is based on:

- the severity to the process if that function fails (low, medium, high)

- the probability of that function failing (lower for a standard function, higher for a complex, configured or customised function) (low, medium, high)

- the detectability of the failure, that is, the likelihood of discovering that the function has failed before it causes harm (high, medium, low)
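As a minimal sketch, the three factors above can be combined with two lookup tables: severity and probability give a risk class, and the risk class together with detectability gives a priority. The names `Level`, `RISK_CLASS`, `PRIORITY` and `risk_priority` are hypothetical, and the cell values are an illustrative assumption; substitute the matrix from your own approved risk assessment procedure.

```python
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


L, M, H = Level.LOW, Level.MEDIUM, Level.HIGH

# (severity, probability) -> risk class, where 1 is the highest risk.
# Cell values are assumed for illustration only.
RISK_CLASS = {
    (H, L): 2, (H, M): 1, (H, H): 1,
    (M, L): 3, (M, M): 2, (M, H): 1,
    (L, L): 3, (L, M): 3, (L, H): 2,
}

# (risk class, detectability) -> priority.
# Failures that are hard to detect (low detectability) push the priority up.
PRIORITY = {
    (1, H): "medium", (1, M): "high", (1, L): "high",
    (2, H): "low", (2, M): "medium", (2, L): "high",
    (3, H): "low", (3, M): "low", (3, L): "medium",
}


def risk_priority(severity, probability, detectability):
    """Return 'high', 'medium' or 'low' for one function or requirement."""
    risk_class = RISK_CLASS[(severity, probability)]
    return PRIORITY[(risk_class, detectability)]


# A customised function whose failure is severe and hard to spot.
print(risk_priority(Level.HIGH, Level.HIGH, Level.LOW))
# A standard function whose failure is minor and obvious.
print(risk_priority(Level.LOW, Level.LOW, Level.HIGH))
```

Keeping the matrices as data rather than nested conditions makes it easier to review them against the written procedure and to change a cell without touching the logic.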

By assessing each test condition on a function, reduced test documentation effort can be justified.

High risk functions may require a more detailed script and possibly documented evidence, whereas medium and low risk functions may only require a noted pass/fail result or having witnessed the intent.
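One way to record this decision consistently is a small mapping from the assessed risk level to the expected test approach and evidence. This is a sketch only: the function name `choose_test_approach` is hypothetical, and the split between medium and low risk is an assumption, since either may be satisfied by a noted pass/fail or a witnessed intent.

```python
def choose_test_approach(risk_level):
    """Map an assessed risk level to a test approach and the evidence to keep."""
    approaches = {
        # High risk: formally approved script, documented evidence retained.
        "high": ("scripted testing", "detailed script with documented evidence"),
        # Medium risk (assumed split): test case intent with a recorded result.
        "medium": ("structured non-scripted testing", "noted pass/fail result"),
        # Low risk (assumed split): confirm the function has been witnessed.
        "low": ("structured non-scripted testing", "intent witnessed and noted"),
    }
    try:
        return approaches[risk_level.lower()]
    except KeyError:
        raise ValueError(f"unknown risk level: {risk_level!r}") from None


for level in ("high", "medium", "low"):
    approach, evidence = choose_test_approach(level)
    print(f"{level}: {approach} ({evidence})")
```

Note that no level maps to "no testing": even the lowest level still asks for the function to be witnessed before go-live.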

Even a low risk function error that is not found until a system is live is going to cost more than one found in the test cycle. You are implicitly testing your system every day you use it, so why not at least confirm beforehand that every required function has been witnessed?

A CSA approach will definitely save time and cost in all CSV projects, but be aware that trying to over-simplify the ‘critical thinking’ approach can undo the CSA rewards.

In a future article, I will demonstrate this approach with a worked CSA example.

SeerPharma has successfully used the CSA approach and is helping companies to move towards this less burdensome approach to Computer Software Assurance.

Contact us if you would like to know more about CSA, and how these proposed changes may affect your organisation.

Interested in reading our thoughts on CSA?

- Article 1: Computer Software Assurance

- Article 2: Computer Software Assurance - Risk and Reward (this article)

- Article 3: Computer Software Assurance - Use of Critical Thinking

- Article 4: Computer Software Assurance - Configuration / Design and Closing the Loop